Training Mario with Reinforcement Learning

Date Created: November 12, 2024

Date Modified:

On a lovely day, I asked myself: how can I make a computer learn to play Mario? Well, I did just that, and set out on this journey to understand reinforcement learning (RL) better. This blog post documents my experiments with training an AI agent to play Super Mario Bros using a Double Deep Q-Network (DDQN).

What is Reinforcement Learning?

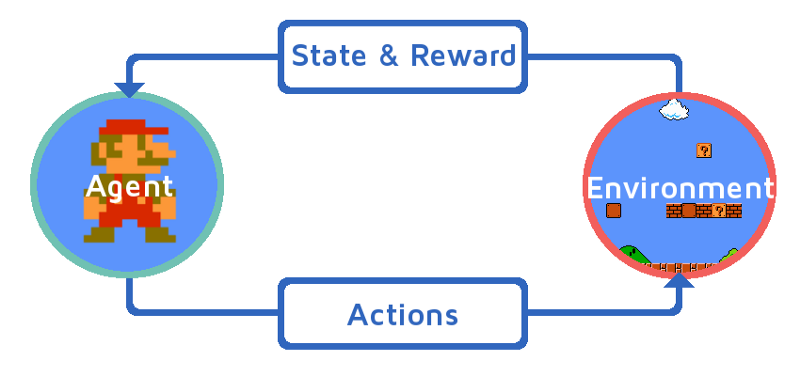

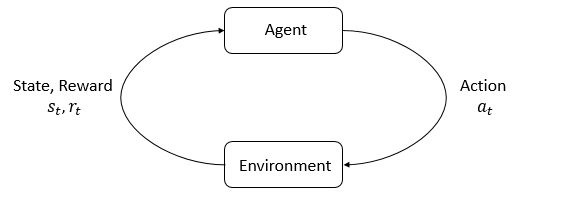

Reinforcement learning (RL) is a type of machine learning where an agent learns by interacting with its environment to achieve a specific goal. The agent takes actions and receives rewards or penalties based on the effectiveness of those actions. Over time, the agent uses this feedback to adjust its behavior, aiming to maximise cumulative rewards.

RL is commonly used in gaming, robotics, finance, or any setting where sequential decision-making under uncertainty is required. For example, an RL agent can learn to play video games by trying different strategies and learning from the outcomes. A famous example is AlphaGo, which used RL to beat world champions in the game of Go. RL also powers agents such as AlphaStar in StarCraft II and OpenAI Five in Dota 2, which play at or above the level of top human players.

In the case of Mario, the agent's actions are moving left or right, jumping, or shooting fireballs.

The basic elements of reinforcement learning include:

- Agent: The learner or decision-maker that interacts with the environment.

- Environment: The setting in which the agent operates.

- Action: Choices the agent can make.

- State: The current situation or context in which the agent finds itself.

- Reward: Feedback given to the agent to indicate success or failure.

"For any given state, an agent can choose to do the most optimal action (exploit) or a random action (explore)." The agent has to learn when to do which in order to make better decisions. This trade-off between exploration and exploitation is a key challenge in reinforcement learning.

Core Concepts

Imagine you're playing a video game. You are the agent (the character you control), and the environment is the game world (the levels, obstacles, and everything around you). You observe what is happening in the game (like a monster coming toward you) and decide what to do next (maybe jump, run away, or fight back); the environment then responds to whatever you did.

States and Observations

The state is like a snapshot of everything in the game world at a specific moment: for example, where the monsters are, what items are around, and how much health you have. Observations are what the agent can see or know about the world. Sometimes you can see everything (like in a game where you can see the whole map), and sometimes you can only see part of it (like if you're inside a building in the game and can't see the outside). In other words, the state is the complete picture. If the agent can see all of it, the environment is fully observed; most of the time the agent sees only part of it, and the environment is partially observed.

Action Space

Action space is the set of all possible things you can do in the game. For example, you might have options like "jump", "run", or "attack". These are the actions the agent can choose from.

In some environments, such as Atari games and Go, the agent operates within discrete action spaces, meaning there are a limited number of possible actions it can take. Other environments, like where the agent controls a robot in a physical world, have continuous action spaces. In continuous spaces, actions are real-valued vectors.

Policies

A policy is like a set of rules or a plan that tells the agent what to do based on what it sees. So, if you see a monster, your policy might tell you to jump, or if it is attacking you first, you fight back. If you see a treasure, your policy might tell you to collect it.

Since the policy acts as the agent's brain, the terms "policy" and "agent" are often used interchangeably. For example, one might say, "The policy aims to maximise the reward."

In deep RL, we use parameterised policies. These policies are functions whose outputs depend on a set of parameters, such as the weights and biases of a neural network. By adjusting these parameters through optimisation algorithms, we can change the behavior of the policy.

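A policy can be either deterministic (it always picks the same action in a given state) or stochastic (it samples an action from a probability distribution).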
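To make the deterministic/stochastic distinction concrete, here is a minimal sketch in plain Python. The action labels, the scores, and the use of a softmax to turn scores into probabilities are all assumptions for illustration; they are not taken from the tutorial's code.

```python
import math
import random

ACTIONS = ["right", "jump_right"]  # hypothetical labels for illustration


def deterministic_policy(scores):
    """Always return the index of the highest-scoring action."""
    return max(range(len(scores)), key=lambda i: scores[i])


def softmax(scores):
    """Turn raw scores into a probability distribution."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def stochastic_policy(scores, rng=random):
    """Sample an action index with probability given by softmax(scores)."""
    probs = softmax(scores)
    return rng.choices(range(len(scores)), weights=probs, k=1)[0]


scores = [0.2, 1.5]  # made-up scores for one state
print(ACTIONS[deterministic_policy(scores)])  # always the same action
print(ACTIONS[stochastic_policy(scores)])     # may differ between calls
```

The deterministic version is what "exploit" looks like; the stochastic version keeps some chance of trying the lower-scoring action.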

Trajectories

A trajectory is a sequence of states and actions in the world, like the path the agent takes through the game. It's the series of actions the agent takes, starting from the beginning until the end. It's like the story of what you do in the game.

Trajectories are also frequently called episodes or rollouts.

Rewards

The goal of Reinforcement Learning (RL) is for the agent to get better by practicing and learning from what happens when it takes different actions. The agent gets rewards for good actions and tries to do more of those to maximise its total reward.

The reward function, written as R, tells the agent how good or bad a certain action was. It depends on where the agent was (the state it was in, like a level in a game), what action it just took (like moving, jumping, or grabbing something), and where it ended up (the next state, after the action). So we could write the reward for one step as: r = R(s, a, s′)

Sometimes, though, it's simpler to look only at where the agent is now (or where it is and what action it took).

The agent doesn't just care about the reward from one action; it cares about getting the most points or rewards over time. This total score over a period is called the return, written as R(τ). There are a couple of ways to look at return:

- Finite-Horizon Undiscounted Return: the plain sum of all rewards collected within a fixed window of steps, like adding up all the points you get in a short level or time period in a game.

- Infinite-Horizon Discounted Return: the sum of all rewards the agent ever gets (an infinite horizon), where a discount factor (written as γ, between 0 and 1) makes rewards worth a bit less the further in the future they arrive.
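Written out, the two kinds of return look roughly like this. The notation is an assumption added for illustration: r_t is the reward at step t, T is the length of the fixed window, and γ is the discount factor.

```latex
% Finite-horizon undiscounted return: plain sum over a fixed window of T+1 steps
R(\tau) = \sum_{t=0}^{T} r_t

% Infinite-horizon discounted return: every reward counts, scaled by \gamma^t
R(\tau) = \sum_{t=0}^{\infty} \gamma^{t} r_t, \qquad \gamma \in (0, 1)
```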

With a discount factor, the agent prioritises short-term gains (like going for treasures) but still considers discounted long-term rewards (by factoring in future treasures after defeating the monster). Plus, using a discount factor makes the math easier, because an infinite sum of rewards stays finite. For each decision, the agent might calculate a value estimate based on both immediate rewards and discounted future rewards. So, even if it avoids one monster initially for a quick treasure, it might tackle the next if it seems worthwhile.
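As a small worked example of how discounting changes the total, here is a sketch in plain Python. The reward values and the helper name `discounted_return` are made up for illustration.

```python
def discounted_return(rewards, gamma):
    """Sum rewards, weighting the reward at step t by gamma ** t."""
    total = 0.0
    # Work backwards so each step is: reward now + gamma * (return after it).
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


rewards = [1.0, 1.0, 1.0]               # made-up rewards for three steps
print(discounted_return(rewards, 1.0))  # undiscounted sum: 3.0
print(discounted_return(rewards, 0.9))  # roughly 2.71 (1 + 0.9 + 0.81)
```

With γ = 1 every reward counts equally; with γ = 0.9 the third reward is only worth about 0.81 by the time it is added to the total.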

Initial Setup

Note: This blog follows the instructions from this tutorial. Along the way, I discuss and modify parts of it to make sense of both the article and the code.

Setting up the environment was quite the adventure. If you've worked with Python packages before, you know the usual suspects - version conflicts, deprecation warnings, and the occasional "this doesn't work like it used to" moments.

- Outdated components

- Compatibility issues because some functions are deprecated

- Getting the right combination of dependency versions

After a few hours of debugging and package juggling, I finally got everything working together.

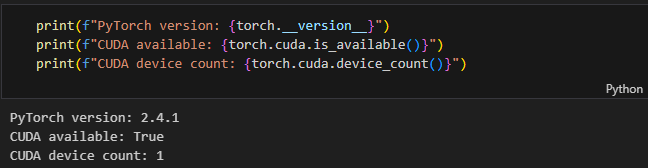

Listing every dependency I used would be a bit much, but here are the main ones:

pytorch=2.4.1=py3.8_cuda12.4_cudnn9_0

torchrl=0.5.0

torchvision=0.20.0

gym=0.26.1

gym-super-mario-bros=7.4.0

numpy=1.24.4

matplotlib-base=3.7.3

You can see that I used CUDA and cuDNN for GPU acceleration. To find the right versions, I recommend checking here, the official PyTorch website, or using Miniconda with the conda-forge channel for up-to-date packages. Once you have the right versions, it will look something like this:

Initialise Environment

In Mario, pipes, mushrooms, and so on are the components of the environment. This is where the agent will interact with the game world, taking actions and receiving rewards based on its performance.

    import gym
    import gym_super_mario_bros
    from nes_py.wrappers import JoypadSpace

    # Initialise Super Mario environment (in v0.26 change render mode to 'human' to see results on the screen)
    if gym.__version__ < '0.26':
        env = gym_super_mario_bros.make("SuperMarioBros-1-1-v0", new_step_api=True)
    else:
        env = gym_super_mario_bros.make("SuperMarioBros-1-1-v0", render_mode='rgb', apply_api_compatibility=True)

    # Define the movement options for Super Mario
    MOVEMENT_OPTIONS = [
        ["right"],       # Move right
        ["right", "A"],  # Jump right
    ]

    # Apply the wrapper to the environment
    env = JoypadSpace(env, MOVEMENT_OPTIONS)

    env.reset()
    next_state, reward, done, trunc, info = env.step(action=0)
    print(f"{next_state.shape},\n {reward},\n {done},\n {info}")

This code snippet initialises the Super Mario environment, sets up the movement options, and applies a wrapper to the environment, which helps the agent interact with the environment by providing a set of actions it can take. As a test, the code prints out the shape of the next state, the reward, the done flag, and the info dictionary after taking the first action in the environment.

When you call env.step(action=0), you're telling Mario to perform action 0, which in the MOVEMENT_OPTIONS list is moving right. Of course, you can change the action to 1, which is a jump to the right. The function returns the next state (a 240x256x3 image), the reward (0.0 in this case), a done flag (False, meaning the episode is not over), and some additional information about the environment. This is something that Mario will probably see (for illustration purposes):

Think of it like pressing the right button for a split second: the game checks whether you got a reward and whether the game is over, and then returns the new state. This is a single step in the game; the interaction loop continues until the game is over or the AI beats the level.

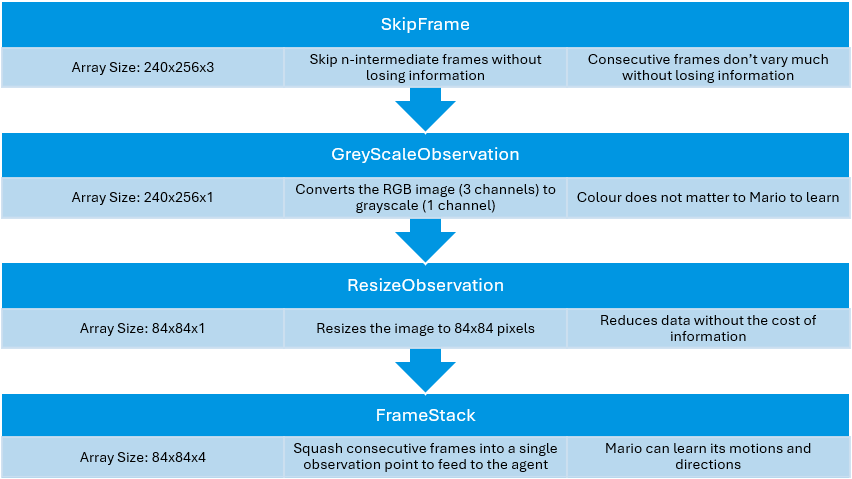

Pre-process the Environment

In the previous output, the next state was a 240x256x3 image which is returned by the environment. Often, this is too much information for the agent to process directly. Mario does not need to see the entire screen to make decisions. Instead, we will apply wrappers to the environment to pre-process the images and make them more manageable for the agent.

The final output is smaller by almost 85%, which means faster processing and less memory usage. This is much simpler than what a human sees, but contains all the essential information needed to learn how to play the game effectively.
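To see where a figure of roughly 85% can come from, here is a quick back-of-the-envelope check. The processed shape is an assumption: it supposes the frames are converted to grayscale, resized to 84x84, and stacked four at a time, which this post does not state explicitly.

```python
raw_shape = (240, 256, 3)      # height, width, RGB channels (from the env output)
processed_shape = (4, 84, 84)  # assumed: 4 stacked 84x84 grayscale frames


def num_values(shape):
    """Number of scalar values in an array of the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count


raw = num_values(raw_shape)              # 184320
processed = num_values(processed_shape)  # 28224
reduction = 1 - processed / raw
print(f"{raw} -> {processed} values ({reduction:.1%} smaller)")
```

Under those assumptions the observation shrinks from 184,320 values to 28,224, which is about 84.7% smaller.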

Replay Buffer

A replay buffer is like a "memory bank" that stores the agent's experiences while it plays the game. Each experience consists of: The current state (what Mario sees), the action taken, the reward received, the next state, and whether the game ended (done flag). Something like this:

In this tutorial, the author uses TensorDictReplayBuffer with LazyMemmapStorage, a replay buffer implementation from TorchRL. The replay buffer stores experiences (state, action, reward, next state, done) and samples mini-batches for training the agent. Unfortunately, I failed to get it running.

Apparently, this is a Windows-specific error that occurs when the system tries to allocate more virtual memory than is available. Since I don't want to mess with the system too much and I don't have any money for RAM yet, I decided to write a simpler replay buffer implementation and called it SimpleReplayBuffer.

| SimpleReplayBuffer | TensorDictReplayBuffer with LazyMemmapStorage |

|---|---|

| Uses simple Python deque for storage | More sophisticated memory management |

| Implements basic prioritised experience replay | Uses memory mapping for large datasets |

| Straightforward memory management | More complex data structures |

| Less feature-rich but more robust | More features but more potential points of failure |

My SimpleReplayBuffer does 2 key things:

- push (Storing Experiences):

  - It takes a snapshot of what happened during one step of Mario's gameplay.

  - It calculates how important this memory is (priority = |reward| + epsilon).

  - It stores the experience in a deque and the priority in a separate deque.

- sample (Prioritised Sampling):

  - Think of this like Mario "remembering" past experiences to learn from. It takes in how many memories to recall (batch_size) and prefers to remember important moments (high rewards).

  - If picking important memories fails (due to zero probabilities), it falls back to random memories.

  - Then, it converts the chosen memories into a format suitable for learning (GPU tensors).
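The two steps above can be sketched roughly as follows. This is not my actual SimpleReplayBuffer; it is a minimal stand-in under stated assumptions: the class name, the default epsilon of 0.01, and sampling with replacement via `random.choices` are all illustrative, and the final conversion to GPU tensors is left out so the example runs without PyTorch.

```python
import random
from collections import deque


class SimpleReplayBufferSketch:
    """Deque-backed buffer that samples in proportion to |reward| + epsilon."""

    def __init__(self, capacity, epsilon=0.01):
        self.experiences = deque(maxlen=capacity)  # oldest entries drop out first
        self.priorities = deque(maxlen=capacity)   # kept aligned with experiences
        self.epsilon = epsilon

    def push(self, state, next_state, action, reward, done):
        """Store one step of gameplay together with its priority."""
        self.experiences.append((state, next_state, action, reward, done))
        self.priorities.append(abs(reward) + self.epsilon)

    def sample(self, batch_size):
        """Draw a batch (with replacement), favouring high-priority experiences."""
        items = list(self.experiences)
        weights = list(self.priorities)
        if sum(weights) > 0:
            return random.choices(items, weights=weights, k=batch_size)
        # Fallback: every priority is zero, so sample uniformly instead.
        return random.choices(items, k=batch_size)

    def __len__(self):
        return len(self.experiences)


buffer = SimpleReplayBufferSketch(capacity=3)
for step in range(5):
    buffer.push(state=step, next_state=step + 1, action=0, reward=float(step), done=False)
print(len(buffer))  # 3, because the two oldest experiences were evicted
batch = buffer.sample(2)
print(batch)
```

Using two deques with the same maxlen keeps each priority lined up with its experience as old entries are evicted.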

Even though it does essentially the same job as TensorDictReplayBuffer, the SimpleReplayBuffer is actually a better solution for my current setup. It is lightweight, easy to understand, and doesn't require any special dependencies. Plus, it is easier to debug and modify if needed.

It's-a me, Mario!

It's time to create the Mario-playing agent. Basically, Mario should be able to:

- Act according to the optimal policy (exploit) or try new things (explore) based on the current state.

- Remember experiences. Experience = (current state, current action, reward, next state). Mario will cache and recall these experiences to update his policy.

- Learn from these experiences, which means updating his policy to maximise rewards over time.

Act

    def act(self, state):
        """
        Given a state, choose an epsilon-greedy action and update value of step.

        Inputs:
        state (``LazyFrame``): A single observation of the current state, dimension is (state_dim)
        Outputs:
        ``action_idx`` (``int``): An integer representing which action Mario will perform
        """

The act function takes a state, which is a LazyFrame object representing what Mario "sees" at a given moment. From there, it chooses an action based on an epsilon-greedy strategy (exploit vs. explore). If the agent is exploring (randomly trying new things), it will choose a random action. If the agent is exploiting (using what it knows to maximise rewards), it will choose the best action by relying on MarioNet in the Learn section.

The method is called every time Mario needs to make a decision, which happens many times per second during gameplay.

The exploration rate starts at 1, meaning Mario explores 100% of the time early in training.

Over time, this rate decreases through exploration_rate_decay (a multiplicative factor just below 1), so Mario explores less and is pushed to exploit more.

The rate never goes below exploration_rate_min, which is configurable.
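To get a feel for how slowly the exploration rate falls, here is a small sketch of epsilon-greedy selection with multiplicative decay. The decay value 0.99999975 comes from my first run; the floor of 0.1, the helper names, and applying the decay once per step are assumptions for illustration.

```python
import math
import random


def exploration_rate_at(step, start=1.0, decay=0.99999975, minimum=0.1):
    """Exploration rate after `step` multiplicative decays, floored at `minimum`."""
    return max(minimum, start * decay ** step)


def steps_until_minimum(start=1.0, decay=0.99999975, minimum=0.1):
    """Smallest number of decays after which the rate has reached the floor."""
    return math.ceil(math.log(minimum / start) / math.log(decay))


def epsilon_greedy(q_values, exploration_rate, rng=random):
    """Random action with probability `exploration_rate`, else the greedy one."""
    if rng.random() < exploration_rate:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda i: q_values[i])


print(exploration_rate_at(0))           # 1.0: pure exploration at the start
print(steps_until_minimum())            # on the order of 9 million steps
print(epsilon_greedy([0.1, 0.7], 0.0))  # 1: with no exploration, the greedy action
```

Under the same assumptions, a decay of 0.99999 would reach the floor after roughly 230 thousand steps, which is why this one parameter changes how long Mario keeps acting randomly so dramatically.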

Remember (Cache and Recall)

    def cache(self, state, next_state, action, reward, done):
        """
        Store the experience to self.memory (replay buffer)

        Inputs:
        state (``LazyFrame``),
        next_state (``LazyFrame``),
        action (``int``),
        reward (``float``),
        done (``bool``)
        """

    def recall(self):
        """
        Retrieve a batch of experiences from memory
        """

These 2 functions serve as the memory bank for Mario.

The cache function stores experiences (state, next state, action, reward, done) in the memory (replay buffer). The recall function samples a batch of experiences from the replay buffer for training. This is where Mario "remembers" past experiences and uses them to learn.

There are 2 key parameters to consider when caching and recalling experiences: the buffer size and the batch size.

The buffer size determines how many experiences Mario can remember at once. For Mario, each level lasts around 200-300 seconds. By my estimate, 50000 experiences should be enough to cover 150-250 full levels, making it a good starting point. If you have the computational resources, you can increase this number to remember more experiences, which gives more diverse training data and makes it less likely that important events are forgotten.

The batch size determines how many experiences Mario will recall for training. A larger batch size means Mario can learn from more experiences at once, which can lead to more stable learning. However, larger batch sizes require more memory and computational resources, so it's a trade-off.

Learn

MarioNet

    class MarioNet(nn.Module):
        """mini CNN structure
        input -> (conv2d + relu) x 3 -> flatten -> (dense + relu) x 2 -> output
        """

The MarioNet class defines the neural network architecture that Mario uses to learn how to play the game. In this instance, we use a Double Deep Q-Network (DDQN). For brevity, this blog won't drill into the details. However, know that DDQN tends to train more stably than plain DQN because it reduces overestimated Q-values, while staying relatively simple to implement compared with more complex variants such as Dueling DQN.
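One detail worth checking when building a small CNN like this is how large the flattened feature vector is after the three convolutions, since the first dense layer has to match it. The sketch below does that arithmetic in plain Python. The layer settings (kernel sizes 8, 4, 3, strides 4, 2, 1, and 32, 64, 64 output channels) and the 84x84 input are assumptions, not values stated in this post.

```python
def conv_output_size(size, kernel, stride, padding=0):
    """Spatial size after a 2D convolution (same formula for height and width)."""
    return (size + 2 * padding - kernel) // stride + 1


# Assumed layer settings (kernel, stride, out_channels); not stated in this post.
LAYERS = [(8, 4, 32), (4, 2, 64), (3, 1, 64)]

size = 84  # assumed input height and width after pre-processing
for kernel, stride, _ in LAYERS:
    size = conv_output_size(size, kernel, stride)

flattened = LAYERS[-1][2] * size * size
print(size, flattened)  # 7 3136
```

Under these assumptions the spatial size goes 84 → 20 → 9 → 7, so the first dense layer would take 64 × 7 × 7 = 3136 inputs.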

TD Estimate and Target (Temporal Difference Learning)

    @torch.amp.autocast("cuda")  # Mixed precision; torch.cuda.amp.autocast() is deprecated in recent PyTorch
    def td_estimate(self, state, action):
        """Compute current Q-value estimate"""

    @torch.amp.autocast("cuda")
    @torch.no_grad()  # No gradients needed for target
    def td_target(self, reward, next_state, done):
        """Compute TD target using DDQN"""

In short, TD learning is about estimating the value of a state-action pair and updating this estimate based on the reward and the expected value of the next state.

- TD Estimate (Current Guess):

- What Mario thinks the value of current state-action pair is

- Comes from online network (current knowledge)

- Like Mario saying "I think jumping here will give me X points"

- TD Target (Better Guess):

- What Mario should think the value is, based on immediate reward received and estimated future value from more stable target network.

- Comes from target network (updated less often, so more stable)

- Like a teacher saying "Actually, jumping there gave you Y points plus future possibilities"

Over time, learning happens by minimising the difference between the TD estimate and the TD target, so Mario's estimates get closer to reality.
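For a single transition, the Double DQN target can be sketched in plain Python as below. The function name, the list-based Q-values, and the numbers are made up for illustration; the real code works on batched tensors.

```python
def ddqn_td_target(reward, done, q_online_next, q_target_next, gamma=0.9):
    """TD target for one transition using the Double DQN rule.

    q_online_next / q_target_next: per-action Q-values for the next state,
    from the online and target networks respectively.
    """
    if done:
        return reward  # no future value once the episode has ended
    # The online network picks the action...
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ...and the target network scores it.
    return reward + gamma * q_target_next[best_action]


target = ddqn_td_target(
    reward=1.0, done=False,
    q_online_next=[0.2, 0.8],  # online net prefers action 1
    q_target_next=[5.0, 2.0],  # target net's value for action 1 is 2.0
)
print(target)                # approximately 2.8, i.e. 1.0 + 0.9 * 2.0
td_estimate = 2.0            # made-up current guess for Q(s, a)
print(target - td_estimate)  # the TD error the loss tries to shrink
```

Note that the target network's own favourite (action 0, valued at 5.0) is ignored: splitting action selection from action evaluation is what keeps the target from chasing over-optimistic values.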

Model Updates (Training Process)

    def update_Q_online(self, td_estimate, td_target):
        loss = self.loss_fn(td_estimate, td_target)  # How wrong was Mario?
        self.optimizer.zero_grad()  # Clear previous gradients
        loss.backward()             # Calculate gradients
        self.optimizer.step()       # Update network weights
        return loss.item()

    def sync_Q_target(self):
        # Copy online network weights to target network
        self.net.target.load_state_dict(self.net.online.state_dict())

These functions are responsible for updating the online network based on the TD estimate and TD target. It's like Mario's brain adjusting its understanding. The update_Q_online function calculates the loss between the TD estimate and TD target, backpropagates the loss to update the network weights, and returns the loss value. The sync_Q_target function, which will be called occasionally, synchronises the target network with the online network by copying the weights so that the target network is updated with the latest knowledge.

Learning Loop

    def learn(self):
        # Important timings
        if self.curr_step < self.burnin:  # First 5000 steps
            return None, None  # Just collect experiences
        if self.curr_step % self.learn_every != 0:  # Only learn every 2 steps
            return None, None  # Skip learning on the other steps
        if self.curr_step % self.sync_every == 0:  # Every 5000 steps
            self.sync_Q_target()  # Sync target network
        if self.curr_step % self.save_every == 0:  # Every 500,000 steps
            self.save()  # Save checkpoints

        # Main learning steps
        state, next_state, action, reward, done = self.recall()  # Get batch
        td_est = self.td_estimate(state, action)  # Current guess
        td_tgt = self.td_target(reward, next_state, done)  # Better guess
        loss = self.update_Q_online(td_est, td_tgt)  # Learn

        # Return the mean Q estimate and the loss, matching the early returns above
        return td_est.mean().item(), loss

The learn function is the heart of the agent's learning process. It decides when to start learning, when to skip learning, and when to synchronise the target network.

- First 5000 steps: Just collect experiences

- Every 2 steps: Run a learning update (the steps in between are skipped)

- Every 5000 steps: Sync target network

- Every 500,000 steps: Save checkpoint

The flow would be something like this: Get Batch → Calculate Guess → Calculate Target → Update Network
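To check how these timings interact, here is a tiny simulation of the schedule in plain Python. It mirrors the order of the checks in the learn snippet above with the same values (5000, 2, 5000); the function name and the returned dictionary are made up for illustration, and checkpoint saving is left out.

```python
def learn_schedule(step, burnin=5000, learn_every=2, sync_every=5000):
    """Return which parts of the learn loop would run at `step`.

    The burn-in and learn_every checks return early, so syncing only
    happens on steps where learning also happens.
    """
    if step < burnin:
        return {"learn": False, "sync": False}
    if step % learn_every != 0:
        return {"learn": False, "sync": False}
    return {"learn": True, "sync": step % sync_every == 0}


print(learn_schedule(100))    # burn-in: nothing happens yet
print(learn_schedule(5001))   # odd step: skipped
print(learn_schedule(5002))   # learns, no sync
print(learn_schedule(10000))  # learns and syncs the target network
updates = sum(learn_schedule(s)["learn"] for s in range(20000))
print(updates)  # 7500 gradient updates in the first 20,000 steps
```

So with these settings, only 7,500 of the first 20,000 environment steps actually trigger a gradient update.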

Logging

For tracking and visualising the training process, the MetricLogger class is created. It is a comprehensive logging system used to track, save, and visualise the agent's performance during training.

In essence, these metrics help understand the agent's learning:

- Increasing rewards indicate the agent is getting better

- Decreasing losses show stable learning, that the agent is learning from its mistakes

- Increasing Q-values show the agent is finding better strategies

- Episode lengths can indicate if Mario is surviving longer or completing levels faster

Evaluation

I have made an evaluation script in order to watch MarioNet play. Because after all, the goal is to see Mario beat the game by itself, right?

The script runs in human-visible mode (render_mode="human") and records video, making it easy to analyse the agent's behavior.

The evaluation process is somewhat similar to the training process. The eval script still sets up the environment and MarioNet, but it loads the checkpoint saved by the training process earlier. Then, we implement the gameplay loop, run a Mario session played by the model, and record its performance. This is where you can see how well Mario is performing and if he's improving over time.

Mario Gameplay - Recorded on November 17, 2024

As you can see, he's stuck. But that's okay, it's part of the learning process. The agent will learn from these experiences and improve over time.

The script will track several metrics to demonstrate its playing styles and identify areas for improvement: Total steps taken, final x-position reached, chosen actions, whether Mario reached the flag, and average speed (pixels/step).
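As a rough idea of how such a summary can be assembled, here is a minimal sketch. The function name, its arguments, and the definition of average speed as horizontal distance divided by steps are assumptions for illustration, not the exact code of my evaluation script.

```python
from collections import Counter


def summarise_episode(actions, start_x, final_x, flag_reached):
    """Summarise one evaluation episode from its logged actions and positions."""
    steps = len(actions)
    return {
        "total_steps": steps,
        "final_x": final_x,
        "action_counts": dict(Counter(actions)),  # how often each action was chosen
        "flag_reached": flag_reached,
        # Average horizontal progress in pixels per step
        "avg_speed": (final_x - start_x) / steps if steps else 0.0,
    }


# Made-up log: four steps, moving from x=40 to x=50 without reaching the flag.
summary = summarise_episode([0, 0, 1, 0], start_x=40, final_x=50, flag_reached=False)
print(summary)
```

An average speed near zero over many steps is a quick way to spot the "stuck in place" behaviour without watching the video.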

Training Process and Parameter Optimisation

Training a reinforcement learning agent to play Super Mario Bros is computationally intensive. With my RTX 3060 Ti 8GB GPU setup, I had to make several compromises and optimisations to make the training feasible. Here's what I learned from two different training approaches.

| Training Approach | Results |
|---|---|
| First Training Run: Fast but Limited | |
| Second Training Run: Longer but More Complex | |

    # First Run (Aggressive)
    batch_size = 32                      # Smaller batch size for faster updates
    exploration_rate_decay = 0.99999975  # Slower decay for more exploration
    gamma = 0.90                         # Lower discount factor: weights near-term rewards more
    learning_rate = 0.00025              # Smaller step size than the second run
    SimpleReplayBuffer(100000, ...)      # Larger buffer for more experiences

    # Second Run (Conservative)
    batch_size = 256                     # Larger batch size for more stable learning
    exploration_rate_decay = 0.99999     # Faster decay: exploits sooner
    gamma = 0.95                         # Higher discount factor: weights long-term rewards more
    learning_rate = 0.0005               # Larger step size than the first run
    SimpleReplayBuffer(50000, ...)       # Smaller buffer for less memory usage

After messing around, here is what I concluded:

- Action Space Trade-off: The 2-action model (first run) performed well, coming close to or matching the 6-action model. This suggests that with limited computational resources, a simpler action space might be more efficient.

- Behavioral Patterns: Still to be observed further, since both models occasionally got stuck in place, which indicates incomplete learning.

- Time: The 2-action model is so much faster. The 6-action model, while it showed more diverse strategies, required significantly more training time to become optimal.

I will certainly continue to experiment with different hyperparameters and training strategies to improve the agent's performance. My first idea is to take the 2-action model and train it in shorter episodes. I will also try more extreme parameter values to push the model to converge faster, since my hardware is limited.

I will update with more findings in part 2 soon enough. In the meantime, you can check out this project in a more concise form, or my other projects.